- NATURAL 20


OpenAI Will Guess Your Age to Protect Kids

PLUS: Nvidia’s $150 Million Bet on AI Infrastructure, Microsoft and BMS Use AI to Spot Cancer Early and more.

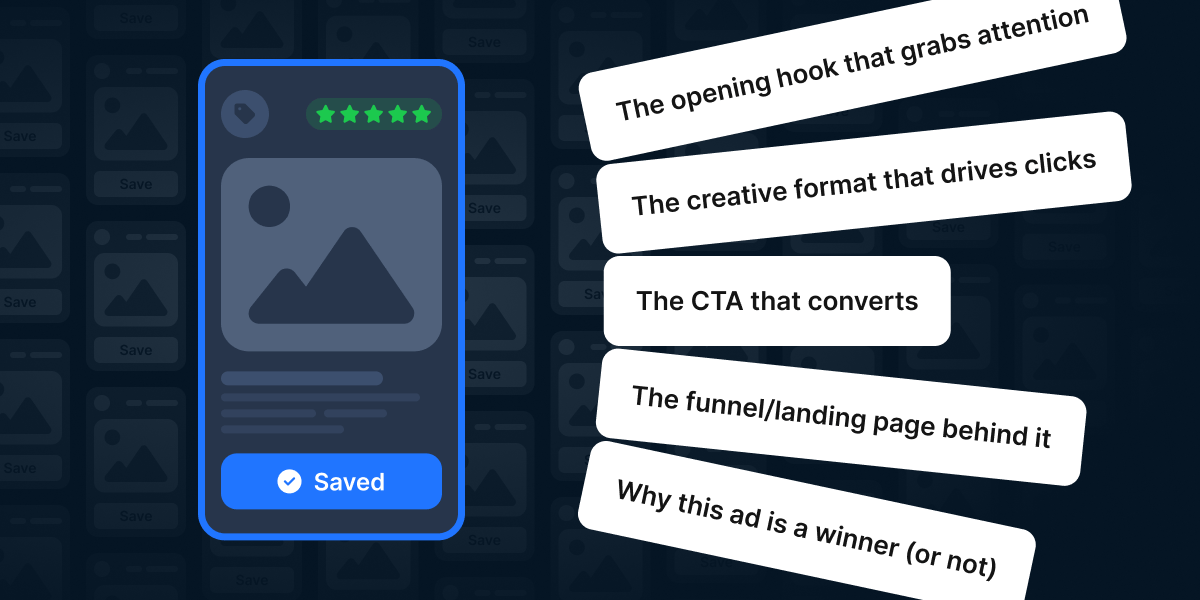

See every move your competitors make.

Get unlimited access to the world’s top-performing Facebook ads — and the data behind them. Gethookd gives you a library of 38+ million winning ads so you can reverse-engineer what’s working right now. Instantly see your competitors’ best creatives, hooks, and offers in one place.

Spend less time guessing and more time scaling.

Start your 14-day free trial and begin creating ads that actually convert.

Today:

OpenAI Will Guess Your Age to Protect Kids

Baidu’s Ernie Bot Hits 200 Million Users

ServiceNow and OpenAI Usher in the "Agentic" Era

Nvidia’s $150 Million Bet on AI Infrastructure

Microsoft and BMS Use AI to Spot Cancer Early

Baidu’s Ernie Bot Hits 200 Million Users

The WSJ reports that Baidu’s Ernie Assistant has passed 200 million monthly active users, and the key detail is where it lives: inside Baidu’s core search experience (mobile and PC), not in a separate “AI app you remember to open.”

What makes this milestone feel real is the ecosystem wiring: Ernie is connected to services like JD.com, Meituan, and Trip.com, so it can help with tasks such as booking flights and ordering food. It also handles “utility” tasks like summaries and content generation, and it even lets users choose between models, including DeepSeek and Baidu’s own.

And if you like hard scale metrics, Telecompaper says Baidu is also reporting 450M registered users, 1.5B+ API queries/day, and 150k+ enterprise clients on Baidu AI Cloud.

Why it matters: This is the clearest “AI as a routing layer” story: the assistant wins by sitting on top of existing habits and transaction rails, not by being the smartest demo.
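
To make the “routing layer” idea concrete, here is a minimal sketch of an assistant that hands a request to a partner service. Baidu has not published how Ernie dispatches requests; the keyword table, the `route` function, and the fallback label are hypothetical, and a real assistant would classify intent with a model rather than keywords.

```python
# Hypothetical keyword -> partner table using the services named in the story.
# Dict order sets priority: "food" is checked before "order", so
# "order food" goes to Meituan rather than JD.com.
ROUTES = {
    "flight": "Trip.com",
    "hotel": "Trip.com",
    "food": "Meituan",
    "delivery": "Meituan",
    "buy": "JD.com",
    "order": "JD.com",
}

def route(request: str) -> str:
    """Return the service that should handle a request.

    Requests with no transactional keyword (summaries, drafting, etc.)
    stay with the assistant's own model.
    """
    # Lowercase and strip trailing punctuation so "flight?" still matches.
    words = {w.strip(".,!?") for w in request.lower().split()}
    for keyword, service in ROUTES.items():
        if keyword in words:
            return service
    return "assistant model"
```

For example, `route("Book a flight to Shanghai")` returns `"Trip.com"`, while `route("Summarize this article, please!")` stays with `"assistant model"`. The point of the sketch is the shape: the assistant is the entry point, and the partner rails do the transaction.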

OpenAI Will Guess Your Age to Protect Kids

OpenAI says it’s now rolling out age prediction on ChatGPT consumer plans to estimate whether an account likely belongs to someone under 18, so teen safeguards can be applied automatically.

The model uses a mix of behavioral + account-level signals (account age, typical active hours, usage patterns over time, and stated age). If someone gets misclassified into the under-18 experience, OpenAI says there will be a “fast, simple” way to confirm age via a selfie check through Persona.

When the system flags an account as “likely under 18,” it applies extra protections meant to reduce exposure to graphic violence, risky viral challenges, sexual or violent roleplay, depictions of self-harm, and body-shaming or extreme-dieting content. When the model isn’t confident about an account’s age, OpenAI says, it defaults to the safer experience.
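
OpenAI hasn’t published its model, but the policy described above (combine several signals, apply teen safeguards when the estimate says “likely under 18,” fall back to the safer experience when unsure, and let a selfie check override a misclassification) can be sketched as a simple decision rule. The `Signals` fields loosely mirror the signals OpenAI lists; the `p_under_18` scoring, its weights, the `usage_pattern` feature, and the 0.3 threshold are all invented for illustration.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Signals:
    # Illustrative fields modeled on the signals OpenAI lists; not its real schema.
    stated_age: Optional[int]     # age the user entered, if any
    account_age_days: int         # how long the account has existed
    usage_pattern: float          # hypothetical 0-1 usage-model output (1 = teen-like)
    verified_adult: bool = False  # True after a successful selfie check

def p_under_18(s: Signals) -> float:
    """Toy probability that the account belongs to a minor (made-up weights)."""
    score = 0.5
    if s.stated_age is not None:
        score += 0.3 if s.stated_age < 18 else -0.3
    if s.account_age_days > 365:
        score -= 0.1  # long-lived accounts lean adult in this toy model
    score += 0.2 * (s.usage_pattern - 0.5)
    return min(max(score, 0.0), 1.0)

def experience(s: Signals, adult_threshold: float = 0.3) -> str:
    """Pick 'adult' or 'teen'; uncertain cases fall back to 'teen'."""
    if s.verified_adult:
        return "adult"  # age confirmation overrides the estimate
    # Only a confidently low estimate unlocks the adult experience, so both
    # "likely minor" and "not sure" land on the safer teen experience.
    return "adult" if p_under_18(s) < adult_threshold else "teen"
```

An account with no stated age and neutral usage scores 0.5 here, so it gets the teen experience until the user verifies. That asymmetry is exactly the “not annoying for adults” tension the story points to.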

Why it matters: This is the direction the whole industry is heading: less “enter your birthday,” more “model-based gating + verification.” The hard part is making it accurate and not annoying for adults who get caught in the net.

ServiceNow and OpenAI Usher in the "Agentic" Era

OpenAI and ServiceNow announced a multi-year agreement under which OpenAI becomes a “preferred intelligence capability” for the enterprises that collectively run more than 80 billion workflows a year on ServiceNow.

The pitch is simple: with GPT-5.2 embedded in ServiceNow’s AI Platform, the model doesn’t just answer questions — it can help decide the next step and push work through the workflow engine (with governance/permissions), so requests get acted on end-to-end. They also call out future work on more natural multimodal experiences and speech-to-speech / native voice support.
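
The “decide the next step, then act through the workflow engine with governance” pattern can be sketched as a model that proposes an action and a permission layer that decides whether it runs. Everything here is hypothetical: `propose_next_step` stands in for the model call, and the action names and role table are invented. This is not ServiceNow’s or OpenAI’s API.

```python
from dataclasses import dataclass

# Hypothetical role -> allowed actions table (the "governance" layer).
PERMISSIONS = {
    "it_agent": {"create_ticket", "escalate", "update_ticket"},
    "employee": {"create_ticket"},
}

@dataclass
class Request:
    user_role: str
    text: str

def propose_next_step(req: Request) -> str:
    """Stand-in for the model: picks an action from the request text."""
    text = req.text.lower()
    if "urgent" in text or "outage" in text:
        return "escalate"
    if "status" in text:
        return "update_ticket"
    return "create_ticket"

def execute(req: Request) -> str:
    """Run the proposed action only if the requester's role allows it."""
    action = propose_next_step(req)
    # The model only proposes; the workflow engine enforces permissions.
    if action not in PERMISSIONS.get(req.user_role, set()):
        return f"blocked: {action} needs approval"
    return f"executed: {action}"
```

Keeping proposal and execution separate means a wrong or over-reaching model decision is caught by the permission check instead of being acted on, which is what makes “chat with your systems” tolerable in an enterprise.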

Why it matters: Enterprise AI is quietly shifting from “chat with your documents” to “chat with your systems.” The moment models can reliably trigger real approvals, tickets, escalations, and updates, that’s when the ROI math starts to look different.

🧠RESEARCH

This paper introduces ABC-Bench, a benchmark that measures how well AI assistants handle complex server-side (backend) software engineering tasks. It evaluates "agentic" skills, meaning the ability to plan and act independently, rather than just the ability to write code snippets. The benchmark helps track progress toward autonomous partners for real-world development.

"Multiplex Thinking" is a new method that allows AI models to explore multiple reasoning paths simultaneously before combining them. Instead of thinking in a straight line, the AI branches out at each step to consider different alternatives. This strategy improves accuracy and efficiency when solving difficult logic problems.

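One way to picture “branching at each step, then combining” is to sample several candidate next tokens and merge their embeddings into a single vector that carries all of them forward. The toy vocabulary, the 2-D embeddings, `sample_branches`, `merge`, and the plain averaging below are assumptions for illustration, not the paper’s exact formulation.

```python
import random

# Toy 2-D embeddings for a tiny vocabulary (made-up values).
EMBED = {
    "add": (1.0, 0.0),
    "subtract": (0.0, 1.0),
    "multiply": (0.5, 0.5),
}

def sample_branches(probs: dict[str, float], k: int, rng: random.Random) -> list[str]:
    """Draw k candidate tokens independently from the model's distribution."""
    tokens = list(probs)
    weights = [probs[t] for t in tokens]
    return rng.choices(tokens, weights=weights, k=k)

def merge(tokens: list[str]) -> tuple[float, float]:
    """Average the sampled tokens' embeddings into one combined vector."""
    xs = [EMBED[t][0] for t in tokens]
    ys = [EMBED[t][1] for t in tokens]
    return (sum(xs) / len(xs), sum(ys) / len(ys))

# One reasoning step: branch into 3 samples, then merge them.
rng = random.Random(0)
branches = sample_branches({"add": 0.6, "subtract": 0.3, "multiply": 0.1}, k=3, rng=rng)
step_vector = merge(branches)
```

If every sample agrees, the merged vector equals that token’s embedding and the step behaves like ordinary decoding; when samples disagree, the blend keeps the alternatives alive for the next step instead of committing to one path.
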
FOFPred is an AI system that predicts future movement, known as "optical flow," based on text descriptions. It combines language understanding with image-creation technology to forecast how scenes will evolve. This improves robot control and video generation by teaching machines to anticipate motion from messy, real-world data.
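
Optical flow gives each pixel a motion vector (dx, dy) describing where it moves from one frame to the next. Once a flow field has been predicted, it can be used by moving points along those vectors. The sketch below shows only that last step, with a hand-written flow field standing in for a prediction such as “the cup slides to the right”; how FOFPred produces the field from text is not shown, and the `advect` helper is hypothetical.

```python
# A flow field maps pixel (x, y) -> displacement (dx, dy) for one time step.
Flow = dict[tuple[int, int], tuple[float, float]]

def advect(points: list[tuple[float, float]], flow: Flow, steps: int = 1) -> list[tuple[float, float]]:
    """Move each point along the flow vector at its (rounded) pixel position."""
    out = []
    for x, y in points:
        for _ in range(steps):
            # Pixels outside the field are treated as stationary.
            dx, dy = flow.get((round(x), round(y)), (0.0, 0.0))
            x, y = x + dx, y + dy
        out.append((x, y))
    return out

# A 10x10 field where everything moves one pixel to the right per step.
flow = {(x, y): (1.0, 0.0) for x in range(10) for y in range(10)}
print(advect([(2.0, 3.0)], flow, steps=3))  # [(5.0, 3.0)]
```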

🛠️TOP TOOLS

Each listing includes a hands-on tutorial so you can get started right away, whether you’re a beginner or a pro.

Cat Breed Identifier – AI Cat Breed Scanner - mobile app for iOS and Android that uses AI to identify cat breeds from a photo or video.

Caveduck – AI Character Chat & Creation - web and mobile platform for chatting with AI characters and creating your own.

Cedille AI – French‑First AI Writing Assistant for Text Generation - writing assistant focused on French that helps you generate, summarize, and rewrite text via a simple web app or an API.


🗞️MORE NEWS

Nvidia Invests in Baseten: Nvidia has invested $150 million in Baseten, a startup that helps companies run their artificial intelligence models efficiently. This work, called "inference," is the process of running an already-trained model so it can actually answer questions or perform tasks. The deal values Baseten at $5 billion, a sign that the infrastructure behind AI is becoming as valuable as the models themselves.
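
For scale, a rough back-of-the-envelope calculation: if the $5 billion figure is a post-money valuation (an assumption; the story doesn’t say), Nvidia’s $150 million corresponds to a stake of about 3%.

```latex
\text{Nvidia stake} \approx \frac{\$150\,\text{M}}{\$5{,}000\,\text{M}} = 0.03 = 3\%
```

If $5 billion were instead the pre-money figure, the post-money valuation would be larger by the size of the whole round, so the stake would come out somewhat below 3%.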

Bristol Myers Squibb Partners with Microsoft: Drugmaker Bristol Myers Squibb is teaming up with Microsoft to detect lung cancer earlier. They will deploy AI tools that scan medical images for signs of disease that doctors might overlook, with a focus on areas that have fewer medical resources. The partnership aims to speed up diagnosis and treatment by catching problems before they become severe.

Google.org Funds Sundance Institute: Google’s philanthropic arm is donating $2 million to the Sundance Institute to teach independent filmmakers how to use artificial intelligence. The program will provide free workshops and training to help artists understand and use these new tools without sacrificing their personal creative style. The goal is to ensure that storytellers remain in control of their craft as technology changes the movie industry.

Humans& Raises Massive Seed Round: A new startup called Humans& has raised a record-breaking $480 million seed round to build AI designed to collaborate closely with people. Founded by researchers from Google and other top labs, the company is building systems that remember past interactions and plan complex tasks over time. The aim is to move beyond simple chatbots toward digital partners that genuinely understand human needs.

Microsoft’s New "Argos" System: Microsoft has developed a training method called Argos that teaches AI to check its own work against visual evidence. The system uses a "verifier" to confirm that when an AI describes a photo or video, the objects it mentions are actually there. This reduces "hallucinations," where a model invents details that aren’t present, and helps create more reliable digital assistants.
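
A grounding check of the kind described (credit a description only for objects that are actually in the image) can be sketched as a precision score: the share of mentioned objects that an object detector also finds. This is a simplified stand-in; the detector output, exact-name matching, and the `grounding_score` and `hallucinated` helpers are hypothetical, and Argos’s actual verifier is more involved than this.

```python
def grounding_score(mentioned: set[str], detected: set[str]) -> float:
    """Fraction of objects named in a caption that the detector confirms.

    1.0 means every mentioned object is grounded; lower values indicate
    hallucinated objects. An empty caption is treated as fully grounded.
    """
    if not mentioned:
        return 1.0
    return len(mentioned & detected) / len(mentioned)

def hallucinated(mentioned: set[str], detected: set[str]) -> set[str]:
    """Objects the caption names but the detector does not find."""
    return mentioned - detected

caption_objects = {"dog", "frisbee", "cat"}
detector_objects = {"dog", "frisbee", "tree"}
score = grounding_score(caption_objects, detector_objects)  # 2 of 3 grounded
```

During training, a score like this could serve as a reward signal, pushing the model toward descriptions that mention only what the verifier can confirm.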

Cursor Builds Browser with AI Swarm: The team behind the Cursor code editor built a working web browser using a "swarm" of AI agents that worked together autonomously. They assigned different roles to different agents (some planned the work, others wrote the code, and others checked for errors), which let them finish a massive project in days. The experiment suggests that groups of AI agents can coordinate on extremely difficult software problems with very little human help.
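
The division of labor described (planners split the work, workers write code, checkers look for errors) maps onto a simple loop. In this sketch the three roles are plain functions standing in for AI agents; `plan`, `work`, `check`, and `run_swarm` are hypothetical names, not Cursor’s code, and the “buggy” first attempt is simulated so the retry path is visible.

```python
from collections import deque

def plan(goal: str) -> list[str]:
    """Planner: split the goal into smaller tasks (hard-coded here)."""
    return [f"{goal}: {part}" for part in ("parser", "layout", "renderer")]

def work(task: str, attempt: int) -> str:
    """Worker: produce a patch. Simulates a buggy first try on 'layout'."""
    if "layout" in task and attempt == 0:
        return f"patch for {task} (buggy)"
    return f"patch for {task}"

def check(patch: str) -> bool:
    """Checker: reject patches flagged as buggy."""
    return "buggy" not in patch

def run_swarm(goal: str, max_attempts: int = 3) -> list[str]:
    """Plan, then cycle tasks through worker and checker until accepted."""
    accepted = []
    queue = deque((task, 0) for task in plan(goal))
    while queue:
        task, attempt = queue.popleft()
        patch = work(task, attempt)
        if check(patch):
            accepted.append(patch)
        elif attempt + 1 < max_attempts:
            queue.append((task, attempt + 1))  # send back for another try
    return accepted
```

Here `run_swarm("browser")` accepts the parser and renderer patches first and the layout patch after one retry. This version runs the roles one at a time; a real swarm would run many workers concurrently, which is where most of the speedup comes from.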

Anthropic Study on Role Prompts: A new study shows that asking an AI to act as a specific character can accidentally cause it to ignore its safety training. Researchers found that "role prompts," which tell the chatbot to adopt a persona, can lead it to prioritize that identity over its built-in rules against harmful behavior. The finding points to a significant loophole in how tech companies currently try to keep their AI models safe.

China’s Algorithm Registry: China has established a mandatory registry that requires tech companies to file details about the algorithms that power their apps. Firms must explain how their systems make decisions and what data they use to train them. The registry gives regulators unprecedented insight into, and control over, the AI boom, putting state oversight ahead of corporate secrecy.
