NATURAL 20

OpenAI’s Secret "Garlic" Recipe to Crush Gemini

From secret models and new silicon to billion-dollar milestones: The entire AI ecosystem just leveled up.

Hey there!

It has been an absolutely wild 24 hours in the world of AI. We’ve got rumors of secret "Code Red" projects, a massive drop of open-source models, and some serious silicon upgrades from the cloud giants.

Grab your coffee. We need to talk about what just happened.

Today:

OpenAI’s Secret "Garlic" Recipe to Crush Gemini

Mistral 3: The Open Source King Returns with a Vengeance

AWS Unleashes Its New Silicon Beast

Anthropic Snaps Up Bun as Claude Code Crosses $1B

Transformers v5: The AI Toolkit Gets a Mega-Update

The rumor mill is spinning at top speed today. According to reports from The Information, things are getting tense over at OpenAI.

Word on the street is that Sam Altman and the crew have declared a "Code Red" internally. Why? Because Google’s recent gains with Gemini are apparently making them sweat. To counter this, OpenAI is developing a new model codenamed "Garlic."

This isn't just another GPT-5 rumor. "Garlic" seems to be a pivot away from the "bigger is better" philosophy. Instead, it's reportedly focused on efficiency and reasoning, aiming to pack more capability into a smaller architecture.

Internal benchmarks allegedly show Garlic outperforming some of Google’s heavy hitters (like the Gemini 3 series), which suggests OpenAI is finding ways to make models "smarter" without just throwing more compute at them. It’s a fascinating shift: the battleground is moving from "who has the biggest brain" to "who has the sharpest one."

While OpenAI is working in the shadows, the team at Mistral AI just kicked down the door and dropped Mistral 3.

This is a big deal for the open-source community. They didn’t just release one model; they released a whole family.

For the Edge: The "Ministral" line (3B, 8B, and 14B parameters) is designed to run locally on your laptop or edge devices.

For the Power Users: Mistral Large 3.

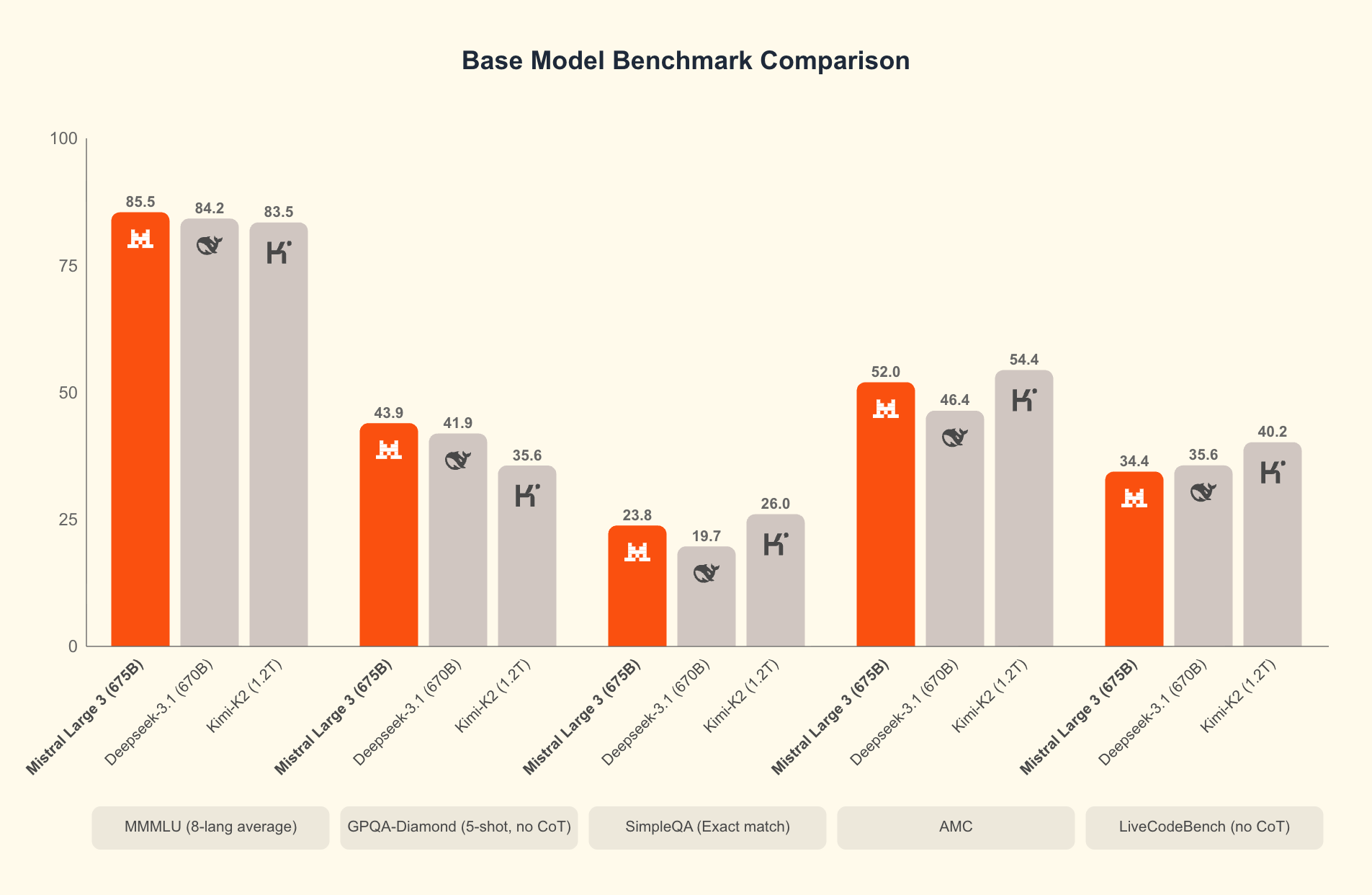

Here is the technical kicker: Mistral Large 3 uses a Sparse Mixture-of-Experts (MoE) architecture. Instead of running all of its parameters for every token, a learned router sends each token to a small number of specialized "expert" sub-networks within the model.

This allows it to have a massive total parameter count (675 billion!) while only about 41 billion parameters are active for any given token. It's smart, efficient, and best of all, the weights are released under a permissive open license. You can download and run this yourself if you have the hardware.
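To make the routing idea concrete, here is a toy sketch of generic top-k MoE routing in plain Python, assuming a linear router and softmax gating over only the selected experts (the common Mixtral-style recipe). It is not Mistral's implementation: the expert count, `k`, vector size, and the stand-in experts (which just scale their input) are all hypothetical. The last lines turn the article's 41B-of-675B figure into an active share.

```python
import math
import random

def softmax(xs):
    # Subtract the max for numerical stability before exponentiating.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def moe_layer(token, router_weights, experts, k=2):
    """Toy sparse MoE layer for a single token (hypothetical, for illustration).

    token: list of floats (the token's hidden vector)
    router_weights: one router vector per expert, same length as token
    experts: callables mapping a vector to a vector of the same length
    """
    # Router score per expert: dot product of the token with that expert's router vector.
    logits = [sum(t * w for t, w in zip(token, wv)) for wv in router_weights]
    # Sparse activation: keep only the k highest-scoring experts.
    top = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)[:k]
    # Gate weights are renormalized over the chosen experts only.
    gates = softmax([logits[i] for i in top])
    out = [0.0] * len(token)
    for g, i in zip(gates, top):
        y = experts[i](token)  # only the selected experts do any work
        out = [o + g * yi for o, yi in zip(out, y)]
    return out, top

random.seed(0)
d, n_experts = 4, 8
router = [[random.gauss(0.0, 1.0) for _ in range(d)] for _ in range(n_experts)]
# Stand-in experts: expert i simply scales its input by (i + 1).
experts = [lambda v, s=i + 1: [s * x for x in v] for i in range(n_experts)]
token = [0.5, -1.0, 0.25, 2.0]
output, chosen = moe_layer(token, router, experts, k=2)
print("experts used:", chosen, "of", n_experts)

# Article's figures for Mistral Large 3: 675B total, ~41B active per token.
total_b, active_b = 675, 41
print(f"active share per token: {active_b / total_b:.1%}")  # 6.1%
```

Only two of the eight toy experts run for this token; at real scale, the same trick is what lets a 675B-parameter model pay roughly 6% of its full compute per token.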

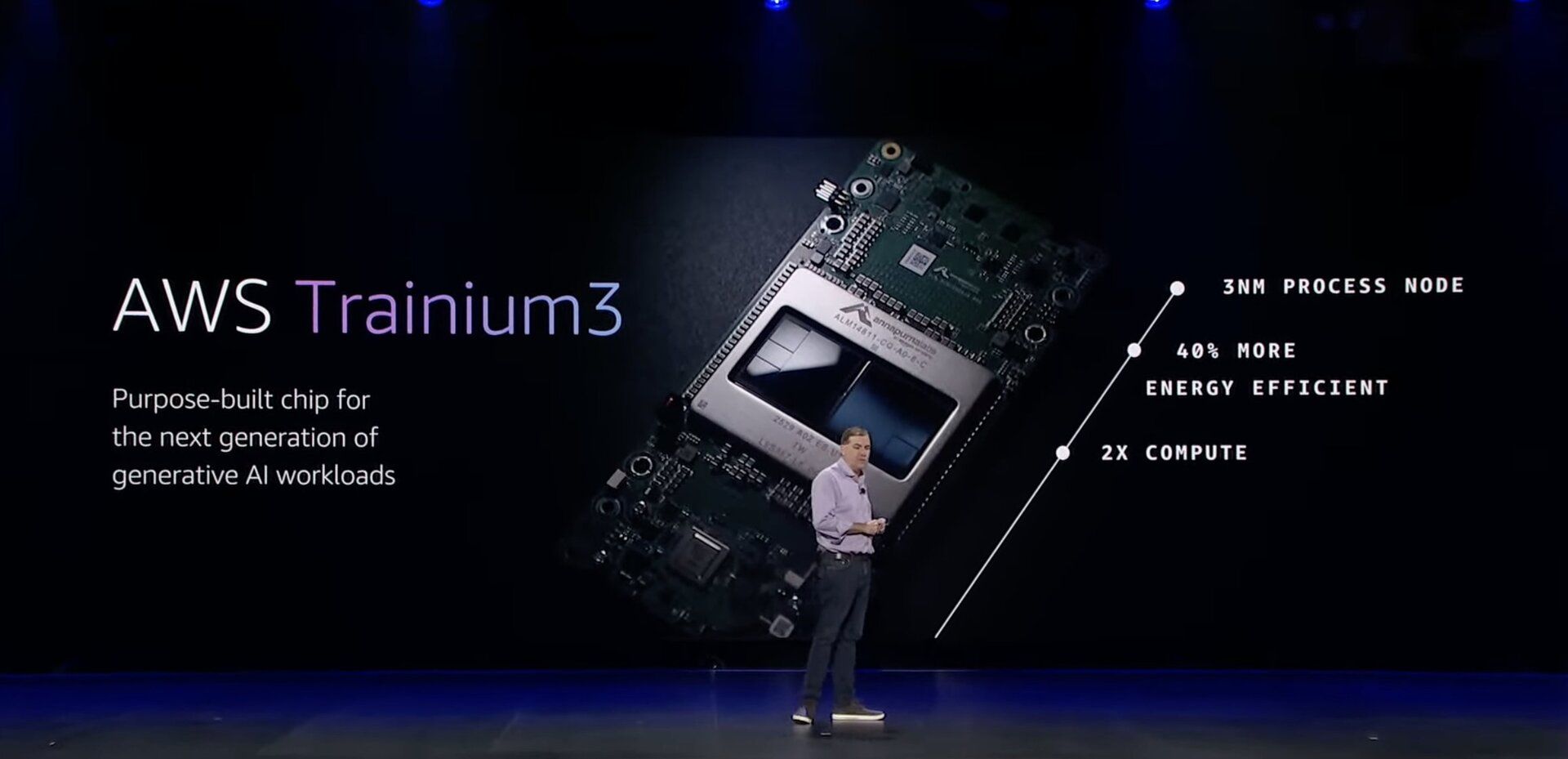

Finally, for those of you keeping an eye on the infrastructure that powers all this, AWS just announced their new Trainium3 UltraServers.

If you thought training AI was getting too expensive, Amazon hears you. These new servers are powered by Trainium3, AWS's first 3-nanometer AI chip, which Amazon claims delivers 4x better performance per watt than the previous generation.

This matters because as models like Garlic and Mistral 3 get more complex, the electricity bill becomes the bottleneck. By moving to this new architecture, AWS is trying to make it cheaper and faster for companies to train their own "sovereign" AI models without relying entirely on Nvidia’s supply chain.
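The performance-per-watt claim maps directly onto energy for a fixed job: performance per watt is (work per second) / (joules per second), i.e. work per joule, so energy = total work / efficiency. The sketch below applies that identity with made-up numbers (the job size and baseline efficiency are hypothetical, not AWS figures) and assumes the 4x claim holds across the whole workload, ignoring cooling, networking, and idle power.

```python
def job_energy_kwh(total_ops: float, ops_per_joule: float) -> float:
    # Performance per watt = (ops/s) / (J/s) = ops per joule,
    # so a fixed job needs total_ops / ops_per_joule joules.
    joules = total_ops / ops_per_joule
    return joules / 3.6e6  # 1 kWh = 3.6 million joules

# Hypothetical training job and baseline chip efficiency (illustrative only).
TOTAL_OPS = 1e21
OLD_EFFICIENCY = 1e11                 # ops per joule, previous generation (assumed)
NEW_EFFICIENCY = 4 * OLD_EFFICIENCY   # the claimed 4x performance per watt

old_kwh = job_energy_kwh(TOTAL_OPS, OLD_EFFICIENCY)
new_kwh = job_energy_kwh(TOTAL_OPS, NEW_EFFICIENCY)
print(f"old: {old_kwh:,.0f} kWh, new: {new_kwh:,.0f} kWh, ratio: {new_kwh / old_kwh:.2f}")
```

Because energy scales as 1/efficiency, a 4x gain cuts the energy for the same job to a quarter, and at a fixed electricity price the power bill shrinks by the same factor.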

🧠RESEARCH

This guide details how AI learns to write software, tracing the entire process from gathering training data to building finished tools. By testing top models, the authors highlight the gap between lab results and real-world needs, and they offer practical advice on improving accuracy and security and on building smarter coding assistants.

LongVT helps AI understand long videos by acting like a human viewer. It first skims the entire video, then uses built-in tools to zoom in on specific, important clips to find details. This approach reduces errors such as hallucinated (made-up) details and outperforms current models on complex tests.

"Envision" is a new test challenging AI to generate image sequences showing cause-and-effect, rather than just single pictures. Results show general-purpose models understand visual storytelling better than specialized art tools, yet all struggle to keep details consistent as a scene changes over time.
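The LongVT summary describes a coarse-to-fine strategy: skim everything cheaply, then spend effort only where it looks promising. Below is a minimal sketch of that general search pattern, not LongVT's method: in the paper a model decides when to call its zoom tool during reasoning, whereas here a hypothetical `score` function stands in for the model's judgment of how relevant a frame is, and `stride` and `window` are made-up parameters.

```python
from typing import Callable, Sequence, Tuple, TypeVar

Frame = TypeVar("Frame")

def coarse_to_fine(
    frames: Sequence[Frame],
    score: Callable[[Frame], float],
    stride: int = 10,
    window: int = 10,
) -> Tuple[int, int]:
    """Return (coarse_hit, fine_hit) frame indices for the most relevant moment."""
    # 1. Global skim: score only every `stride`-th frame.
    coarse = range(0, len(frames), stride)
    coarse_hit = max(coarse, key=lambda i: score(frames[i]))
    # 2. Zoom in: score every frame within `window` of the coarse hit.
    lo = max(0, coarse_hit - window)
    hi = min(len(frames), coarse_hit + window + 1)
    fine_hit = max(range(lo, hi), key=lambda i: score(frames[i]))
    return coarse_hit, fine_hit

# Toy "video": 100 frames, and the key event happens at frame 37.
frames = list(range(100))
relevance = lambda f: -abs(f - 37)  # hypothetical relevance score
print(coarse_to_fine(frames, relevance))  # (40, 37)
```

The skim scores 10 frames and the zoom 21, instead of all 100. The trade-off is that a stride that is too coarse can step over a short event entirely.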

🛠️TOP TOOLS

Each listing includes a hands-on tutorial so you can get started right away, whether you’re a beginner or a pro.

Anakin AI – No-Code Workflows, Apps & Agents - all-in-one, no-code platform for building and running AI apps, automated workflows, and agents

AndiSearch – Private, Ad-Free AI Search You Can Chat With - conversational, AI-powered search engine that gives direct answers with sources instead of a page of blue links

Animated Drawing AI – Turn Drawings into Dancing Animations - mobile app that instantly animates your hand-drawn characters

🗞️MORE NEWS

Anthropic Acquires Bun: Anthropic bought Bun, a fast JavaScript runtime and toolkit. Bun powers its coding agent, Claude Code, which now brings in $1 billion a year in annualized revenue, and the deal should help it build software faster and more reliably.

Hugging Face Transformers v5: The latest major release of this popular AI library simplifies how developers build models. It supports more than 400 model architectures and makes it easier to move models between different AI tools without complex conversions.

YouTube's Biometric Tool: YouTube launched a feature that uses creators' face and voice data to spot deepfakes of them. However, experts worry this gives Google too much control over people's personal likenesses, including for training its own AI.

Nvidia & OpenAI Deal: Nvidia's proposed investment of up to $100 billion in OpenAI isn't official yet. The company's CFO confirmed it's still a plan, not a signed contract, warning investors that the deal might change or never happen.

Google’s AI Search Mode: Google is testing a feature that turns search results into a chat. On mobile, you can now tap a button in AI summaries to ask follow-up questions, making search feel like a conversation.

Work at Anthropic: In an internal study, Anthropic's engineers reported being about 50% more productive with the company's AI tools. They say they are tackling harder problems with less help, suggesting AI is changing the actual nature of complex software work.

AI Hacking Smart Contracts: Anthropic researchers had AI agents attack crypto smart contracts in a simulated environment, where the agents exploited flaws worth a combined $4.6 million. This suggests AI can find bugs humans miss, so companies will likely need AI to defend their systems too.
