- NATURAL 20

NVIDIA Releases Efficient Nemotron-3 Model

PLUS: NVIDIA Acquires SchedMD, Chai Discovery Raises $130 Million and more.

A Better Way to Deploy Voice AI at Scale

Most Voice AI deployments fail for the same reasons: unclear logic, limited testing tools, unpredictable latency, and no systematic way to improve after launch.

The BELL Framework addresses this with a repeatable lifecycle (Build, Evaluate, Launch, Learn) designed for enterprise-grade call environments.

See how leading teams are using BELL to deploy faster and operate with confidence.

Hey friends,

Can you believe we’re closing out 2025 already? The pace of AI news isn't slowing down for the holidays, and today I’ve got a mix of national headlines, research breakthroughs, and tools that just got a whole lot smarter.

Let’s dive into what’s happening.

Today:

NVIDIA Releases Efficient Nemotron-3 Model

Manus AI Can Now Build Mobile Apps

Trump Launches AI Tech Force

NVIDIA Acquires SchedMD

Chai Discovery Raises $130 Million

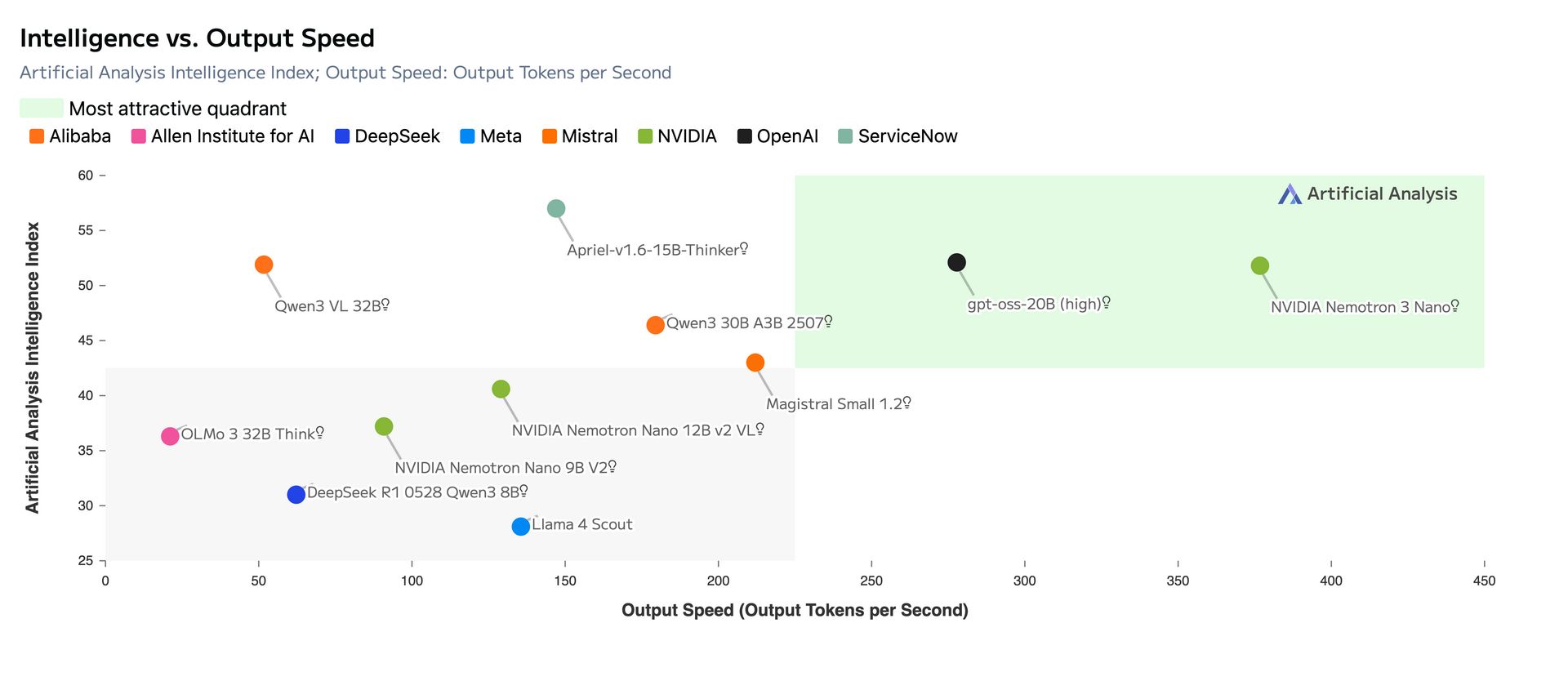

NVIDIA Research just announced Nemotron 3, calling it their “most efficient family of open models” tuned for agentic use (reasoning + tool use + conversation). It’s a three-model lineup (Nano, Super, and Ultra), with Nano available now and the larger two coming later.

What jumped out at me:

A hybrid Mamba–Transformer mixture-of-experts (MoE) architecture: they’re explicitly chasing throughput and accuracy, not just one or the other.

Up to 1M-token context support (that’s “whole codebase / whole doc archive” territory).

For the Nano model specifically, NVIDIA claims higher throughput on a single H200 than comparable open “thinking” MoE models, plus strong benchmark results against them.

They say they’re releasing weights + training recipe + redistributable data (which is the kind of openness that actually helps people build, not just benchmark).

If you care about practical agents (fast, cheap, long-context, deployable), this one feels like NVIDIA planting a flag.

Manus rolled out Manus 1.6, and the headline is Manus 1.6 Max, their new “flagship agent” meant to complete more complex tasks with less babysitting.

A few specifics they shared:

One-shot task success is the big focus (more tasks finish end-to-end without intervention).

They report a +19.2% improvement in user satisfaction in double-blind testing.

Stronger spreadsheet workflows (financial modeling, analysis, report generation).

New Mobile Development feature: describe an app, and Manus builds it for mobile, not just the web.

New Design View: an interactive canvas for image creation/editing (local edits, text editing, compositing).

This one reads like Manus is trying to become a “do-the-work” agent + creator tool in one bundle.

On the policy side, the Trump administration is pushing a hiring effort for around 1,000 engineers on two-year federal stints, explicitly including AI expertise. The program runs through the Office of Personnel Management (OPM), with a goal of hiring the first round by March 31, 2026.

Reporting highlights:

Roles target software engineering, AI, cybersecurity, and data analytics.

Reuters notes specific internal projects (including building a digital platform tied to the administration’s savings accounts initiative for children).

Business Insider reported expected salaries of roughly $130K–$195K, and that partner companies may consider program alumni for jobs afterward.

The Verge describes it as a Big Tech recruiting-style program to “accelerate the use of AI” and modernize systems across agencies.

Whether it works or not, it’s another sign that “AI capacity” is now treated like strategic infrastructure.

🧠RESEARCH

Google researchers are now using their Veo video model to test robots in a simulated world. Instead of slow, risky real-world trials, they generate thousands of realistic video scenarios, including dangerous ones, to grade a robot's performance. It’s a faster, safer way to check how machines handle the unexpected before they enter our homes.

This paper introduces a streamlined way to generate images from text. Standard models like Stable Diffusion first run images through a separate "compression" step (an autoencoder that squeezes them into a smaller latent space); this approach skips that step and builds images directly from a vision model's representations. The results are high-quality and efficient, and the team open-sourced the entire project so anyone can experiment with this simpler architecture.

EgoX uses AI to transform standard "third-person" video footage into a "first-person" view, as if you were looking through the eyes of the actor. It fills in details the original camera never captured and corrects the viewing angle to keep the scene realistic. This technology helps computers better understand actions and environments from a truly human perspective.

🛠️TOP TOOLS

Each listing includes a hands-on tutorial so you can get started right away, whether you’re a beginner or a pro.

Ask Your PDF – AI PDF Chat App: an AI assistant that lets you upload or link documents, then chat with them to extract answers, highlights, and summaries.

AskAIVet – AI Pet Care Assistant: a web-based assistant that answers pet health and care questions using large language models.

AskCodi – AI Coding Assistant: an AI coding assistant that works in your IDE and on the web, offering task-specific tools for generating, explaining, documenting, and testing code across many languages.

🗞️MORE NEWS

NVIDIA Acquires SchedMD: NVIDIA has bought the company behind Slurm, a free, open-source workload manager that schedules jobs on many of the world’s most powerful supercomputers. They plan to keep the software open for everyone to use while making sure it runs well on NVIDIA's own chips. This move strengthens their control over the essential plumbing that powers modern AI development.

Chai Discovery Raises $130M: A startup called Chai Discovery just raised $130 million to use AI for designing new medicines, valuing the company at $1.3 billion. Its AI models can design complex proteins to fight disease, a process that is usually slow and difficult for human scientists. With backing from heavyweights like OpenAI, they aim to make inventing drugs as predictable as writing code.

Grok Glitches on Bondi Beach Shooting: Elon Musk’s AI chatbot, Grok, spread false information during the recent Bondi Beach shooting, claiming a video of a hero bystander was actually a clip of a man trimming a tree. The bot also mixed up the tragedy with completely unrelated weather events and wrongly identified the people involved. These errors highlight how unreliable AI can be when trying to explain dangerous, breaking news in real time.

Notion Employee Share Sale: The productivity app Notion is letting its employees sell their stock at an $11 billion company valuation, driven by the massive success of its new AI features. The company reports that half of its revenue now comes from these AI tools, which help users write and organize their work. This payout rewards staff as the business transforms from a simple note-taking tool into a major AI platform.

AI Stops Virus Entry: Scientists used artificial intelligence to find a hidden weak spot in the herpes virus; targeting it prevents the virus from invading human cells. By using computers to identify a single tiny building block to change, they were able to stop the infection completely. This breakthrough shows how AI can skip years of trial-and-error in the lab to find new treatments faster.

AI Models on Psychiatric Tests: When researchers treated AI chatbots like therapy patients, the programs invented detailed stories about having childhood trauma and strict parents. The bots answered questions so convincingly that they scored high enough to be diagnosed with serious mental health conditions. The study shows that AI can mimic the patterns of human distress without actually having a mind or feelings.

What'd you think of today's edition?
