- NATURAL 20

AGI Is Getting Real

PLUS: White House blocks state-level AI laws, China plans $70 billion for computer chips and more.

Hey there,

Hope you had a great weekend. I was just scrolling through the latest updates, and honestly, the pace right now is blistering. We’ve got video conferencing apps outperforming tech giants on benchmarks and literal "universal translators" hitting our phones.

Let’s get into the good stuff.

Today:

- AGI Is Getting Real

- Zoom AI beats Google on major test

- Google adds instant translation to Gemini

- White House blocks state-level AI laws

- China plans $70 billion for computer chips

Google DeepMind: "The arrival of AGI"

AI is accelerating faster than expected, with top researchers and institutions now openly discussing the imminent arrival of Artificial General Intelligence (AGI). A once-unthinkable chart from the Federal Reserve now outlines two futures: one of rapid prosperity, the other potential collapse. Leaders from OpenAI and DeepMind predict superintelligence by 2035. AWS is building agents that do real work, not just chat.

This shift could end the link between labor and survival, forcing society to rethink education, jobs, and wealth distribution. Serious conversations are happening—about economics, power, and control—as humanity approaches a point of no return. The next decade will define everything.

Zoom AI beats Google on major test

If you’ve been feeling like AI progress is getting harder to measure (because models “game” easier tests), this one is a useful signal.

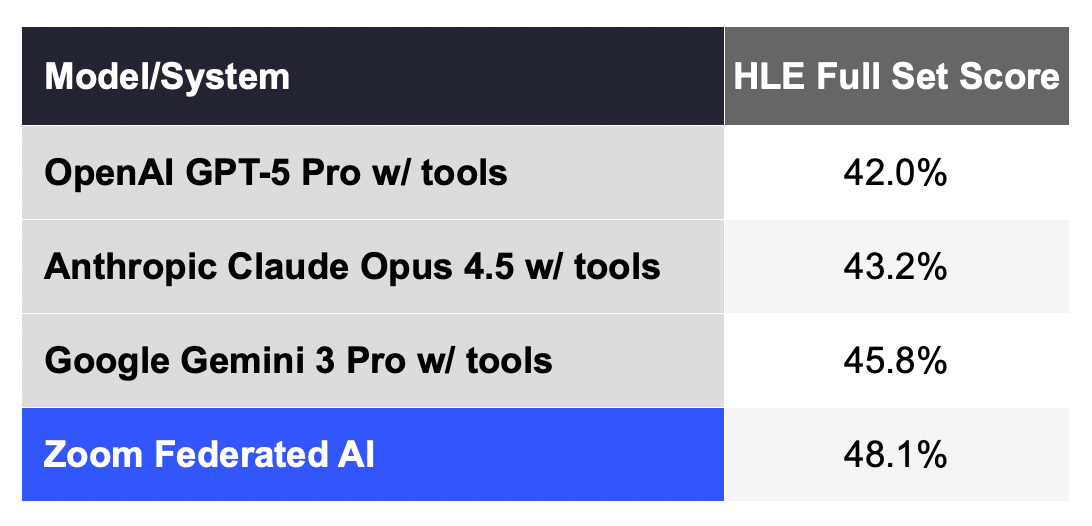

Zoom says its AI hit 48.1% on the full-set Humanity’s Last Exam (HLE), beating the prior best they cite (45.8% from Gemini 3 Pro with tool integration) by 2.3 points.

What’s interesting is how they claim they got there: not by relying on one model, but by running a federated multi-LLM setup that “explores,” then “verifies,” then “federates” results—basically, multiple models propose answers, challenge each other, and a verification step picks the best-supported solution.

They also describe a “Z-scorer” system that selects/refines outputs, and frame this as part of their evolution toward AI Companion 3.0 with more agent-style capabilities (retrieval, writing, workflow automation).
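To make the explore, verify, federate idea concrete, here is a minimal Python sketch. Nothing in Zoom's description tells us how their system is actually built, so treat everything below as an assumption: the function names, the toy "models" (plain functions that return fixed answers), the digit-only checker, and majority voting as the federation step are all made up for illustration. None of this is Zoom's Z-scorer.

```python
from collections import Counter
from typing import Callable, Dict, Optional

# Hypothetical stand-in for one LLM: takes a question, returns an answer string.
Model = Callable[[str], str]


def explore(models: Dict[str, Model], question: str) -> Dict[str, str]:
    """Explore: each model independently proposes an answer."""
    return {name: model(question) for name, model in models.items()}


def verify(proposals: Dict[str, str], checker: Callable[[str], bool]) -> Dict[str, str]:
    """Verify: drop proposals that fail an independent check."""
    return {name: answer for name, answer in proposals.items() if checker(answer)}


def federate(verified: Dict[str, str]) -> Optional[str]:
    """Federate: return the answer backed by the most surviving proposals."""
    if not verified:
        return None  # nothing passed verification
    best_answer, _votes = Counter(verified.values()).most_common(1)[0]
    return best_answer


def answer_question(models, question, checker):
    """Run the three stages in order."""
    return federate(verify(explore(models, question), checker))


# Toy "models" (assumed for illustration): fixed answers instead of real LLM calls.
toy_models = {
    "model_a": lambda q: "42",
    "model_b": lambda q: "42",
    "model_c": lambda q: "forty-two",
}

result = answer_question(toy_models, "What is 6 * 7?", checker=str.isdigit)
print(result)  # prints 42
```

In a real system the checker would likely be another model or a tool call rather than `str.isdigit`, and the final pick could weigh confidence instead of counting votes. The point here is only the shape: several proposals, an independent filter, then one agreed answer.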

Why this matters to you: it’s another datapoint that the next jump may come less from “one giant brain,” and more from systems that coordinate multiple brains—like having a small team instead of a lone genius.

Google adds instant translation to Gemini

If you’ve ever tried live voice agents, you already know the two failure modes: they sound robotic, or they sound great but fall apart when they need to do things (call tools, follow instructions, keep context).

Google says it’s shipping an updated Gemini 2.5 Flash Native Audio for live voice agents, with improvements in:

- Function calling reliability (triggering external tools at the right time). They cite 71.5% on “ComplexFuncBench Audio.”

- Instruction following, claiming 90% adherence (up from 84%).

- Multi-turn conversation quality (better use of earlier context).

They also say it’s rolling out across Google AI Studio, Vertex AI, Gemini Live, and Search Live.

The sleeper feature is live speech-to-speech translation in the Google Translate app (beta). It is designed to preserve intonation, pacing, and pitch, and Google claims coverage of 70+ languages and 2,000 language pairs.

Why this matters to you: voice is becoming a real UI, but only if agents can reliably follow rules and use tools without breaking the conversation. This update is pointed directly at that.

White House blocks state-level AI laws

This one is more “policy plumbing,” but it can shape what companies are willing to ship, and where.

A new Executive Order titled “Ensuring a National Policy Framework for Artificial Intelligence” argues that state-by-state AI regulation creates a compliance “patchwork,” and directs multiple actions to push toward a single federal framework.

Key pieces (high level):

- The Attorney General must set up an AI Litigation Task Force within 30 days to challenge state AI laws viewed as conflicting with the order’s policy.

- The Commerce Department must publish an evaluation of state AI laws within 90 days, including identifying “onerous” laws and those potentially compelling disclosures or altering outputs in ways that raise constitutional concerns.

- It directs Commerce to issue guidance that can make states with “onerous AI laws” ineligible for certain non-deployment BEAD broadband funds (to the extent allowed by law), and asks agencies to consider conditioning discretionary grants on states not enforcing conflicting AI laws.

- It also calls for steps involving the FCC (a possible federal reporting/disclosure standard) and the FTC (a policy statement relating to deceptive practices and state laws that require “alterations to truthful outputs”).

- The EO explicitly says a legislative recommendation should not propose preempting certain categories like child safety protections and some AI compute/data center infrastructure topics.

Why this matters to you: if you build or invest in AI products, this is part of the battle over whether compliance looks like “50 different rulebooks” or something closer to one national baseline.

🧠RESEARCH

This paper introduces T-pro 2.0, an open-source AI model built specifically for the Russian language. It uses specialized text-processing tools to answer questions faster and reason more effectively than previous versions. The team also released datasets and testing benchmarks to help developers build better, more efficient Russian AI applications.

Scientists explored using reinforcement learning—a training method based on rewards—to create better 3D objects from text descriptions. They discovered that prioritizing human preferences and building the object's overall shape before adding details significantly improves quality. They also released a new test to measure how well these 3D models reason.

This study presents a new AI system capable of solving extremely difficult math competition problems at a human silver-medalist level. It breaks complex tasks into smaller steps, continuously checking its work and summarizing progress. This "long-thinking" approach allows it to tackle challenges that usually confuse standard AI models.

🛠️TOP TOOLS

Each listing includes a hands-on tutorial so you can get started right away, whether you’re a beginner or a pro.

Artsmart AI – AI Image Generator - AI image generator and editor that turns text prompts into finished visuals.

Arvin AI – AI Assistant - browser extension + web app that brings top AI models to any website for on‑page chat and image/design tasks.

Ask AI Deep – Transforming Information into Insights - Reshape your company data and deliver measurable ROI with agents, assistants and intelligence.

🗞️MORE NEWS

China Prepares Chips Incentives: China is planning a massive government spending package of up to $70 billion to boost its domestic computer chip industry. This move aims to help Chinese companies like Huawei reduce their dependence on American technology and get around strict U.S. export rules. If approved, this would be the country’s largest-ever state investment in chip manufacturing.

Google Gemini on Chrome for iOS: Google has integrated its AI assistant, Gemini, directly into the Chrome browser for iPhones and iPads. This update allows users to instantly summarize web pages or ask questions about what they are reading without switching apps. The feature brings the Apple version of Chrome up to speed with the capabilities already available on Android and desktop computers.

OpenAI Ends Vesting Cliff: OpenAI has changed its pay policy so that new employees' equity begins vesting as soon as they join, rather than after the industry-standard one-year wait. This "vesting cliff" removal is a strategic move to attract top talent in a fierce hiring war against rivals like Google and Meta. The change signals that tech companies are willing to break traditional rules to secure the best AI researchers.

AI and Fairness in Hiring: New research suggests that using AI in hiring doesn't just fix or worsen bias, but actually changes our definition of what "fair" means. While nearly 90% of companies now use AI tools to find employees, leaders are split on whether these tools help or harm diversity. The study argues that instead of just looking at the technology, companies need to rethink their entire approach to how they judge fairness in the workplace.

AI Models Analyze Language: A new study shows that advanced AI models can now analyze the complex rules of language just as well as human linguistic experts. The research found that OpenAI’s o1 model could solve difficult grammar puzzles and understand sentence structures that critics previously claimed were impossible for machines. This breakthrough challenges the long-held belief that AI simply mimics speech without truly understanding how language works.
